Abstract

Improving building energy performance is a critical lever for mitigating climate change and supporting sustainable urban development. The task is inherently complex. It requires the integration of advanced computational methods, large-scale data analytics and modern modeling techniques. This research report sets out the conceptual framework and practical implications of End-to-End Deep Meta Modeling (E2DMM). E2DMM is a holistic methodology that combines deep learning algorithms, digital twin technology and large, multidimensional datasets to improve energy efficiency and occupant comfort in buildings. The report examines four areas: the constituent computational techniques, the construction and dynamic use of digital twins, the role of large datasets, and the specialized software and tools involved. On that basis, it analyzes E2DMM’s transformative potential and argues that E2DMM could fundamentally reshape current approaches to building energy optimization. Detailed case studies illustrate the practical implementation of E2DMM, from initial conceptual design through continuous, real-time operational optimization.

Many thanks to our sponsor Focus 360 Energy who helped us prepare this research report.

1. Introduction

Growing concern over climate change has brought the building sector to a critical juncture. So has the urgent need to curb energy consumption across all sectors. Together, these pressures demand genuinely innovative approaches to energy optimization. In the European Union, buildings account for approximately 40% of energy consumption and 36% of energy-related carbon dioxide emissions. These figures underscore both the sector’s environmental footprint and the scope for improvement (energyinformatics.springeropen.com). Traditional building energy management often relies on static models, rule-based control systems and fragmented data analysis. Such approaches frequently struggle with the complexity, dynamism and stochastic nature of modern building energy systems. These systems involve intricate interdependencies between architectural design, material properties, HVAC (Heating, Ventilation, and Air Conditioning) operation, lighting, occupant behavior and fluctuating external conditions. The limitations of conventional methods show up in three ways: sub-optimal energy performance, compromised occupant comfort, and an inability to adapt proactively to changing operational demands or environmental conditions.

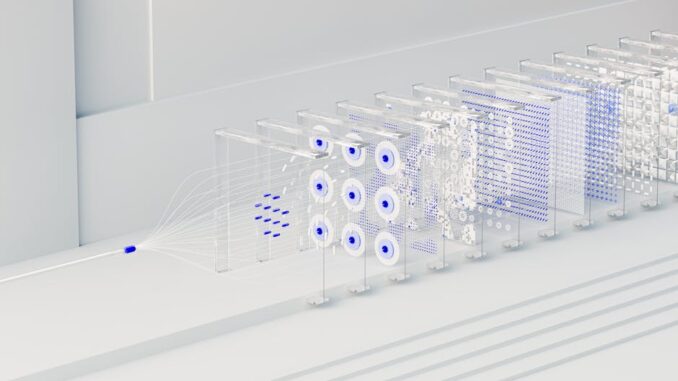

End-to-End Deep Meta Modeling (E2DMM) offers a promising and potentially transformative avenue. E2DMM represents a paradigm shift. It integrates the predictive and pattern-recognition capabilities of deep learning with the real-time simulation and analytical power of digital twin technology. Both rest on extensive, diverse datasets. This combination allows building energy performance to be modeled and optimized across the entire building lifecycle. The ‘End-to-End’ aspect signifies a holistic approach. Data acquisition, model development, simulation, analysis, optimization and control all sit within a unified framework. The ‘Deep’ element refers to multi-layered neural networks capable of learning hierarchical representations from raw data. Crucially, the ‘Meta Modeling’ component goes beyond modeling individual systems. It denotes models that learn from and adapt across various sub-models or processes. In effect, they optimize the optimization process itself, enabling more adaptive and resilient control of complex building ecosystems. This report systematically explores the theoretical foundations, practical implementations and implications of E2DMM for a new generation of intelligent, energy-efficient and human-centric buildings.

2. Computational Techniques in E2DMM

E2DMM is founded upon a sophisticated blend of advanced computational techniques, each contributing distinct capabilities to the overarching goal of comprehensive building energy optimization.

2.1 Deep Learning Algorithms

Deep learning, an influential subset of machine learning, uses artificial neural networks (ANNs) with multiple hidden layers. These layers let models automatically learn hierarchical features and increasingly abstract representations from large quantities of raw data. In doing so, they uncover complex patterns that are often intractable for traditional statistical methods. In building energy optimization, deep learning algorithms support tasks such as predictive modeling of energy consumption, identification of subtle inefficiencies, anomaly detection and proactive suggestion of optimized operational strategies. The versatility of deep learning is evident in the range of architectures applicable to building energy challenges:

-

Recurrent Neural Networks (RNNs): RNNs, particularly their more advanced variants like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), are exceptionally well-suited for processing sequential data. Building energy consumption time series data, which exhibits strong temporal dependencies (e.g., energy use at 3 PM is correlated with use at 2 PM and 4 PM, as well as the previous day’s patterns), benefits significantly from RNNs. They can capture these intricate temporal dynamics, allowing for highly accurate predictions of future energy loads. For instance, LSTMs have been effectively utilized to predict short-term and long-term building energy consumption by adeptly capturing the influence of past energy usage, occupancy schedules, and fluctuating weather conditions (arxiv.org). The ability of LSTMs to mitigate the vanishing gradient problem, which plagues simpler RNNs, makes them particularly robust for longer sequences of energy data.

-

Convolutional Neural Networks (CNNs): While primarily recognized for their success in image processing, CNNs also find application in building energy analytics. They can be employed to analyze spatial data, such as thermal images of building facades to detect heat loss or gain, or to identify spatial patterns in sensor data within large buildings. Furthermore, 1D CNNs can be applied to time-series data, acting as powerful feature extractors for various energy-related prediction tasks, identifying local patterns in energy load profiles that might indicate specific operational events or anomalies.

-

Transformer Networks: Originally developed for natural language processing, Transformer networks have demonstrated exceptional capabilities in handling long-range dependencies in sequential data, surpassing the performance of traditional RNNs in many scenarios. Their self-attention mechanism allows them to weigh the importance of different parts of an input sequence, making them highly effective for forecasting building energy consumption over extended periods, where influences from distant past events might be critical.

-

Graph Neural Networks (GNNs): Buildings can be conceptualized as complex interconnected systems, where various components (e.g., HVAC zones, sensors, equipment) form a graph. GNNs are uniquely positioned to model these relationships, enabling analysis of energy flow, fault propagation, and interdependent control strategies within a building’s ecosystem. For example, a GNN could model the heat transfer between different thermal zones or the operational states of networked HVAC components.
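The gating mechanism described for LSTMs can be made concrete with a minimal, untrained single-unit LSTM in plain Python. This is a sketch only. The weight names, their values and the linear read-out are illustrative assumptions, not a trained forecasting model. A production model would use a deep learning framework, many hidden units and weights learned from historical load data.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_forward(xs: list[float], p: dict[str, float]) -> list[float]:
    """Run a single-unit LSTM over a scalar input sequence and return its hidden states."""
    h, c = 0.0, 0.0  # hidden state and cell state start at zero
    hidden = []
    for x in xs:
        i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate
        f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate
        o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate
        g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate cell value
        # The cell state is updated additively (f * c + i * g); this path is what
        # helps gradients survive across long sequences of energy data.
        c = f * c + i * g
        h = o * math.tanh(c)
        hidden.append(h)
    return hidden


# Hypothetical weights; in practice these are learned from historical load data.
params = {k: 0.5 for k in ("wi", "ui", "wf", "uf", "wo", "uo", "wg", "ug")}
params.update({"bi": 0.0, "bf": 1.0, "bo": 0.0, "bg": 0.0})

# Normalized hourly load for a morning ramp-up (illustrative values).
load = [0.2, 0.3, 0.6, 0.9, 1.0]
states = lstm_forward(load, params)
next_hour_estimate = 0.8 * states[-1] + 0.1  # hypothetical linear read-out layer
```

The forget-gate bias of 1.0 is a common initialization choice that keeps the cell state "open" early in training. It is not a requirement of the architecture.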

2.2 Digital Twin Technology

A digital twin is not merely a static model but a dynamic, virtual counterpart of a physical entity, system, or process, maintained in real-time synchronization through data exchange. This concept fundamentally bridges the physical and cyber worlds. In the domain of building energy optimization, digital twins offer an unprecedented level of insight and control. They integrate real-time operational data streaming from a multitude of sensors, building management systems (BMS), and external sources (like weather forecasts) into a high-fidelity virtual model of the physical building. This continuous data flow enables the digital twin to accurately reflect the current state, behavior, and performance of its physical counterpart.

The utility of a building digital twin extends far beyond simple monitoring. It acts as a living, evolving model that facilitates:

-

Predictive Maintenance: By analyzing operational data and applying predictive algorithms, the digital twin can foresee potential equipment failures or performance degradation (e.g., an HVAC unit drawing more power than expected for current conditions), allowing for proactive maintenance before costly breakdowns occur.

-

Performance Optimization: Through real-time simulation and scenario analysis, the digital twin can evaluate the impact of various operational adjustments (e.g., changing thermostat setpoints, adjusting ventilation rates, rescheduling equipment operation) on energy consumption and occupant comfort, identifying optimal control strategies.

-

Scenario Analysis and What-If Simulations: Architects, engineers, and facility managers can use the digital twin to test hypothetical scenarios without impacting the physical building. This includes evaluating the energy implications of adding new equipment, changing building envelopes, or even responding to extreme weather events.

-

Fault Detection and Diagnostics (FDD): Deviations between the digital twin’s predicted performance and the physical building’s actual performance can quickly signal anomalies or faults, enabling rapid identification and diagnosis of issues ranging from sensor malfunctions to equipment failures.

-

Lifecycle Management: From design and construction through operation and eventual decommissioning, the digital twin can serve as a comprehensive information repository and analysis tool, supporting decision-making across the entire building lifecycle. It can track historical performance, document changes, and inform future renovations or re-commissioning efforts (nyuscholars.nyu.edu).
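Fault detection flags deviations between the twin’s predictions and measurements. A simple residual test can sketch this idea. The sketch learns a threshold from a period assumed to be fault-free and applies a k-sigma band (k = 3 by default). The threshold rule and the function names are illustrative choices, not a prescribed FDD method.

```python
from statistics import mean, stdev


def fit_residual_band(predicted, measured, k=3.0):
    """Learn the normal residual bias and a k-sigma tolerance band from fault-free data."""
    residuals = [m - p for p, m in zip(predicted, measured)]
    return mean(residuals), k * stdev(residuals)


def flag_faults(predicted, measured, bias, band):
    """Return True wherever the bias-corrected residual leaves the tolerance band."""
    return [abs((m - p) - bias) > band for p, m in zip(predicted, measured)]


# Training window: twin-predicted vs metered AHU fan power in kW (illustrative numbers).
bias, band = fit_residual_band([10.0, 10.0, 10.0, 10.0], [10.1, 9.9, 10.0, 10.2])

# Live data: the second reading draws far more power than the twin expects.
alarms = flag_faults([10.0, 10.0], [10.1, 12.0], bias, band)
```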

The fidelity and complexity of digital twins can vary, ranging from simple component-level twins to comprehensive building-level or even district-level twins. E2DMM often necessitates high-fidelity digital twins capable of capturing intricate energy dynamics.

2.3 Hybrid Models (Physics-Informed AI)

Purely data-driven deep learning models, while powerful, can sometimes behave as ‘black boxes.’ They may lack interpretability, require massive amounts of training data, and struggle with generalization outside the distribution of their training data. Conversely, traditional physics-based models (e.g., thermodynamic equations) offer strong interpretability and adherence to fundamental laws but can be computationally intensive and challenging to parameterize accurately due to unknown system properties or dynamic conditions.

Hybrid models, particularly Physics-Informed Neural Networks (PINNs), combine the strengths of deep learning with the robustness of physics-based modeling. PINNs embed known physical laws directly into the neural network’s architecture or, most commonly, its loss function. These laws are expressed as partial differential equations (PDEs) or algebraic constraints. The model is therefore trained to fit the available data and also to stay consistent with the physical principles governing the system. When the physics enters through the loss function, violations are penalized rather than strictly forbidden. This leads to several significant advantages:

-

Enhanced Accuracy and Interpretability: By grounding the learning process in physical laws, PINNs produce more physically consistent and accurate predictions, especially in situations with sparse or noisy data. The explicit inclusion of physical equations also makes the model’s behavior more transparent and interpretable.

-

Reduced Data Requirements: PINNs can learn effectively from smaller datasets because the physics constraints act as a powerful form of regularization, guiding the network’s learning process and preventing overfitting.

-

Improved Generalization: Adherence to physical laws allows PINNs to generalize better to unseen conditions or scenarios that deviate from the training data, a crucial aspect for dynamic building environments.

-

Suitability for Complex Systems: For complex systems like building energy performance, involving intricate heat transfer, fluid dynamics, and energy conversion processes, PINNs offer a robust framework. They can model heat flow through building envelopes, air movement within zones, or the performance of HVAC components while respecting conservation laws (e.g., energy conservation, mass conservation) (arxiv.org). For example, PINNs can predict transient temperature profiles within building walls by solving the heat equation directly within the neural network, simultaneously learning thermal properties from temperature sensor data.

In E2DMM, the integration of PINNs within the digital twin framework ensures that simulations are not only data-driven but also physically realistic, providing a higher level of trust and reliability for optimization and control strategies.
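The structure of a physics-informed loss can be illustrated without a neural network library. The sketch below assumes a single-zone lumped resistance-capacitance (RC) model as the governing physics: C dT/dt = (T_out - T)/R + Q. It approximates dT/dt with forward differences. A true PINN would instead differentiate the network output with automatic differentiation and minimize this loss over the network weights. The weighting factor `lam` is a hypothetical tuning parameter.

```python
def physics_informed_loss(t_pred, t_meas, t_out, q, R, C, dt, lam=1.0):
    """Data-fit term on measured points plus an RC-model physics residual on all points.

    t_pred: candidate zone temperature trajectory, one value per time step
    t_meas: sensor readings aligned with t_pred, None where no measurement exists
    t_out, q: outdoor temperature and heat input for each step
    R, C, dt: assumed lumped thermal resistance, capacitance and step size
    """
    measured = [(p, m) for p, m in zip(t_pred, t_meas) if m is not None]
    data_term = sum((p - m) ** 2 for p, m in measured) / len(measured)

    # Residual of C dT/dt - (T_out - T)/R - Q, which is zero when the physics holds.
    residuals = [
        C * (t_pred[k + 1] - t_pred[k]) / dt - (t_out[k] - t_pred[k]) / R - q[k]
        for k in range(len(t_pred) - 1)
    ]
    physics_term = sum(r * r for r in residuals) / len(residuals)
    return data_term + lam * physics_term


R, C, dt = 2.0, 10.0, 1.0  # illustrative parameters on a dimensionless scale
t_out, q = [0.0] * 4, [1.0] * 4
traj = [20.0]
for k in range(4):  # build a trajectory that satisfies the RC model exactly
    traj.append(traj[-1] + dt / C * ((t_out[k] - traj[-1]) / R + q[k]))
sparse = [traj[0], None, None, None, traj[4]]  # only two sensor readings available
consistent = physics_informed_loss(traj, sparse, t_out, q, R, C, dt)
perturbed = physics_informed_loss([traj[0], 25.0] + traj[2:], sparse, t_out, q, R, C, dt)
```

The perturbed trajectory still matches both sensor readings exactly. Only the physics term exposes it as implausible. This is how physics constraints act as regularization when data are sparse.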

3. Construction and Utilization of Digital Twins

The successful implementation of E2DMM hinges critically on the meticulous construction, rigorous validation, and dynamic utilization of high-fidelity digital twins. This process involves multiple interconnected stages.

3.1 Data Collection and Integration

The foundation of any robust digital twin is comprehensive and high-quality data. The initial phase involves the systematic collection of diverse data types that collectively represent the physical building’s characteristics and operational behaviors. This includes:

-

Static Building Information: This encompasses architectural designs (CAD drawings, BIM models), structural specifications, material properties (thermal conductivity, specific heat capacity, density, emissivity, solar absorptivity of walls, roofs, windows), equipment specifications (HVAC nominal capacities, efficiencies, control logic), and construction schedules. Building Information Modeling (BIM) data is particularly valuable as it provides a geometrically and semantically rich foundation for the digital twin, often containing inherent relationships between building elements (iesve.com).

-

Real-time Sensor Data: Continuous streams of data from various sensors installed throughout the building are paramount. This includes indoor and outdoor temperature, humidity, CO₂ levels, occupancy detection (motion sensors, passive infrared, camera-based), light levels, air flow rates, pressure differentials, and electrical power consumption (at the building, system, and sometimes individual equipment level). Sensor networks must be strategically deployed to provide sufficient spatial and temporal granularity.

-

Building Management System (BMS) Data: BMS platforms collect vast amounts of operational data, including equipment runtimes, control setpoints, valve positions, fan speeds, fault alarms, and operational schedules. This data provides insights into the actual control strategies being implemented and equipment performance under various conditions.

-

Environmental Data: External climate data, encompassing ambient temperature, relative humidity, solar radiation (global horizontal, direct normal, diffuse), wind speed and direction, and precipitation, significantly impacts building energy loads. Historical weather data and real-time forecasts are crucial inputs.

-

Occupancy and User Behavior Data: Beyond simple presence detection, understanding detailed occupancy patterns (number of occupants, their movements, activities, and schedules) is vital. This can be derived from various sources, including access card data, Wi-Fi network analysis, or even anonymized camera feeds, adhering strictly to privacy protocols. User feedback, gathered through surveys or integrated comfort apps, provides qualitative insights into comfort preferences.

Once collected, this heterogeneous data must be integrated into a unified data model, often requiring sophisticated data parsing, cleaning, normalization, and semantic mapping. Interoperability challenges arise due to disparate data formats and communication protocols (e.g., BACnet, Modbus, OPC UA). Standardization efforts like Brick Schema and Project Haystack aim to provide a common language for building data, facilitating seamless integration and semantic understanding across different systems and platforms.
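The following minimal sketch illustrates the normalization and semantic-mapping step. It maps raw BMS point names to Brick class names and converts temperatures to a common unit. The point-naming patterns (for example `AHU1_SAT` or `VAV12.ZNT`) are a hypothetical site convention. Real projects derive such mappings from the BMS point list, and a full Brick model would be expressed as an RDF graph rather than a Python list.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical site naming convention mapped to Brick class names.
POINT_RULES = [
    (re.compile(r"^(?P<equip>AHU\d+)[-_.]SAT$", re.IGNORECASE), "Supply_Air_Temperature_Sensor"),
    (re.compile(r"^(?P<equip>AHU\d+)[-_.]RAT$", re.IGNORECASE), "Return_Air_Temperature_Sensor"),
    (re.compile(r"^(?P<equip>VAV\d+)[-_.]ZNT$", re.IGNORECASE), "Zone_Air_Temperature_Sensor"),
]


@dataclass
class NormalizedReading:
    equipment: str
    brick_class: str
    value_c: float  # temperature in degrees Celsius


def to_celsius(value: float, unit: str) -> float:
    if unit == "degC":
        return value
    if unit == "degF":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unsupported unit: {unit}")


def normalize(raw_name: str, value: float, unit: str) -> Optional[NormalizedReading]:
    """Return a normalized reading, or None if the point matches no known rule."""
    for pattern, brick_class in POINT_RULES:
        match = pattern.match(raw_name.strip())
        if match:
            equipment = match.group("equip").upper()
            return NormalizedReading(equipment, brick_class, to_celsius(value, unit))
    return None
```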

3.2 Modeling and Simulation

With the integrated data, the next step involves creating the virtual model that forms the core of the digital twin. This often entails leveraging established Building Performance Simulation (BPS) software or developing custom models based on the gathered data. The modeling process involves:

-

Geometric and Material Modeling: Utilizing BIM data or CAD models, a precise 3D geometric representation of the building is created. Material properties are assigned to each building component (walls, windows, roof, floor) to accurately model heat transfer dynamics.

-

System Modeling: HVAC systems (boilers, chillers, air handling units, distribution networks), lighting systems, and other energy-consuming equipment are modeled based on their specifications and control logic. This involves defining their performance curves, operational schedules, and interconnections.

-

Behavioral Modeling: This includes modeling occupancy schedules, internal heat gains from occupants and equipment, lighting usage patterns, and ventilation strategies. The dynamic nature of occupant behavior is often approximated or modeled stochastically based on historical data.

-

Environmental Context: The model incorporates external climate data to simulate solar gains, convective and radiative heat losses, and infiltration rates. Location-specific solar angles and sky conditions are calculated.

Once the virtual model is established, it is used for continuous simulation. This can involve:

-

Forward Simulation: Predicting future energy consumption and indoor conditions based on current states, control inputs, and forecasted environmental conditions.

-

Inverse Modeling: Inferring unmeasured parameters or identifying anomalies by comparing simulation outputs with actual sensor data and adjusting model inputs until a match is achieved.

-

Co-simulation: Integrating different simulation engines (e.g., a thermal simulation engine with an HVAC control logic simulator) to handle complex interactions between building physics and system controls.
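Forward simulation and inverse modeling can both be illustrated with a deliberately simple single-zone RC model, C dT/dt = (T_out - T)/R + Q, integrated with explicit Euler steps. This model is an assumed stand-in for a full BPS engine such as EnergyPlus. The grid search over the thermal resistance R is likewise a minimal illustration of inverse modeling, not a recommended estimation method. The "measurements" are synthetic, generated with R = 0.005 K/W, so the search should recover that value.

```python
def simulate_zone(t0, t_out, q, R, C, dt):
    """Forward-simulate zone temperature (deg C) under outdoor temps and heat input (W).

    R: envelope thermal resistance (K/W); C: zone thermal capacitance (J/K); dt: step (s).
    Returns len(t_out) + 1 temperatures, including the initial state.
    """
    temps = [t0]
    for k in range(len(t_out)):
        t = temps[-1]
        temps.append(t + dt / C * ((t_out[k] - t) / R + q[k]))
    return temps


def estimate_resistance(measured, t_out, q, C, dt, candidates):
    """Inverse modeling: pick the R whose simulation best matches measured temps."""
    def sse(R):
        simulated = simulate_zone(measured[0], t_out, q, R, C, dt)
        return sum((s - m) ** 2 for s, m in zip(simulated, measured))
    return min(candidates, key=sse)


# Illustrative 24-hour scenario: cold day, constant 2 kW heating, hourly steps.
t_out = [0.0] * 24
q = [2000.0] * 24
observed = simulate_zone(20.0, t_out, q, R=0.005, C=1.0e7, dt=3600.0)  # synthetic data
best_R = estimate_resistance(observed, t_out, q, C=1.0e7, dt=3600.0,
                             candidates=[0.003, 0.004, 0.005, 0.006])
```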

3.3 Calibration and Validation

Crucially, a digital twin must be rigorously calibrated and validated to ensure its accuracy and reliability as a predictive tool. Without this step, the twin remains a theoretical model rather than a faithful real-time representation. Calibration involves fine-tuning the model’s parameters to align its simulated outputs with the actual measured performance of the physical building. This iterative process often utilizes optimization algorithms to minimize the discrepancy between simulated and measured values.

Key aspects of calibration and validation include:

-

Parameter Adjustment: Modifying uncertain model inputs, such as material thermal properties, equipment efficiencies (e.g., HVAC COP), infiltration rates, or occupancy schedules, within reasonable bounds until the model’s predictions closely match the observed data.

-

Sensitivity Analysis: Identifying which input parameters have the most significant impact on the model’s outputs helps focus calibration efforts on the most influential variables.

-

Validation Metrics: The accuracy of the calibrated digital twin is typically assessed using two standard metrics: the Normalized Mean Bias Error (NMBE) and the Coefficient of Variation of the Root Mean Square Error (CV(RMSE)). These are benchmarked against guidelines from organizations such as ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) or protocols such as IPMVP (International Performance Measurement and Verification Protocol). For instance, ASHRAE Guideline 14 considers a model calibrated with hourly data when CV(RMSE) is below 30% and NMBE is within ±10%. For monthly data, the corresponding limits are 15% and ±5%.

-

Continuous Re-calibration: Buildings are dynamic entities that change over time (e.g., material degradation, equipment wear, changes in occupancy patterns). Therefore, digital twins require continuous monitoring and periodic re-calibration to maintain their accuracy and relevance. This iterative refinement process ensures the digital twin remains a ‘living’ model.
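The two calibration metrics can be computed as below. The formulas follow the commonly cited ASHRAE Guideline 14 form: measured minus simulated in the numerator and n - p degrees of freedom. The default p = 1 and the sign convention are assumptions to check against the edition of the guideline being applied. The pass/fail check uses the guideline’s hourly criteria: CV(RMSE) of at most 30% and NMBE within ±10%.

```python
import math


def nmbe(measured, simulated, p=1):
    """Normalized Mean Bias Error in percent (measured minus simulated)."""
    n = len(measured)
    mean_measured = sum(measured) / n
    bias = sum(m - s for m, s in zip(measured, simulated))
    return 100.0 * bias / ((n - p) * mean_measured)


def cv_rmse(measured, simulated, p=1):
    """Coefficient of Variation of the Root Mean Square Error in percent."""
    n = len(measured)
    mean_measured = sum(measured) / n
    squared_errors = sum((m - s) ** 2 for m, s in zip(measured, simulated))
    return 100.0 * math.sqrt(squared_errors / (n - p)) / mean_measured


def is_calibrated_hourly(measured, simulated):
    """Apply hourly criteria: CV(RMSE) <= 30 % and |NMBE| <= 10 %."""
    return cv_rmse(measured, simulated) <= 30.0 and abs(nmbe(measured, simulated)) <= 10.0


# Illustrative hourly electricity use (kWh): metered values vs digital twin output.
metered = [100.0, 110.0, 90.0, 100.0]
twin = [100.0, 100.0, 100.0, 100.0]
```

In this example the errors cancel, so NMBE is zero even though individual hours are off by 10%. This is why the guideline pairs NMBE with CV(RMSE) rather than relying on either alone.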

3.4 Real-Time Monitoring and Control

Once calibrated and validated, the digital twin becomes a powerful tool for real-time monitoring, analysis, and control. It acts as the central intelligence hub for building operations:

-

Anomaly Detection and Fault Diagnosis: By continuously comparing real-time sensor data with the digital twin’s predicted performance, deviations can be rapidly detected. Machine learning algorithms can then be applied to identify the root cause of these anomalies (e.g., a faulty sensor, a malfunctioning damper, an inefficient chiller), enabling proactive fault diagnosis and maintenance scheduling.

-

Predictive Control Strategies: The digital twin can serve as the core of a Model Predictive Control (MPC) system. An MPC algorithm uses the digital twin to forecast future building performance (e.g., thermal response, energy demand) from predicted external conditions and desired internal setpoints. It then optimizes control actions over a rolling horizon, applying only the first step before re-planning. The aim is to minimize energy consumption while maintaining occupant comfort and respecting operational constraints. For example, an MPC system could pre-cool a building during off-peak electricity hours or use thermal mass strategically to shift energy loads.

-

Optimization of Operational Parameters: The digital twin facilitates the dynamic adjustment of various operational parameters, such as HVAC setpoints, lighting schedules, ventilation rates, and equipment staging, in real-time. This optimizes energy usage in response to changing occupancy, weather, and energy tariffs.

-

Human-in-the-Loop Control and Feedback Loops: While autonomous control is a goal, the digital twin often provides actionable insights to human operators, who can then make informed decisions. Furthermore, occupant feedback mechanisms (e.g., via mobile apps for comfort settings) can be integrated into the digital twin’s control logic, creating adaptive and personalized environments. This feedback forms a crucial part of the continuous learning and improvement cycle for the digital twin.

-

Visualization and Reporting: Digital twin platforms typically include advanced visualization tools that present complex operational data and simulation results in intuitive dashboards, empowering facility managers to understand building performance at a glance and identify areas for improvement.
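The receding-horizon logic of MPC can be sketched with the same kind of single-zone RC model and a brute-force search over a small set of discrete heating levels. Real MPC implementations typically use a numerical optimizer, such as a quadratic or mixed-integer program, together with a calibrated twin. The price forecast, heating levels, comfort limit and penalty weight here are all illustrative assumptions.

```python
from itertools import product


def zone_step(t, t_out, q, R, C, dt):
    """One explicit-Euler step of C dT/dt = (T_out - T)/R + Q."""
    return t + dt / C * ((t_out - t) / R + q)


def mpc_first_action(t_now, t_out_fc, price_fc, levels, R, C, dt, t_min, penalty=1000.0):
    """Search every heating plan over the horizon; return only the first move.

    price_fc: electricity price per kWh for each step; levels: allowed heating powers (W).
    Comfort violations below t_min (deg C) are penalized rather than forbidden.
    """
    best_plan, best_cost = None, float("inf")
    for plan in product(levels, repeat=len(t_out_fc)):
        t, cost = t_now, 0.0
        for k, q in enumerate(plan):
            t = zone_step(t, t_out_fc[k], q, R, C, dt)
            cost += price_fc[k] * q * dt / 3.6e6   # energy cost (W*s -> kWh)
            cost += penalty * max(0.0, t_min - t)  # comfort penalty
        if cost < best_cost:
            best_plan, best_cost = plan, cost
    # Receding horizon: apply the first action, then re-plan at the next step.
    return best_plan[0]


levels = (0.0, 2000.0, 4000.0)
prices = [0.10, 0.10, 0.10]
cold = mpc_first_action(21.0, [0.0, 0.0, 0.0], prices, levels,
                        R=0.005, C=1.0e7, dt=3600.0, t_min=20.0)
mild = mpc_first_action(21.0, [21.0, 21.0, 21.0], prices, levels,
                        R=0.005, C=1.0e7, dt=3600.0, t_min=20.0)
```

Exhaustive enumeration grows exponentially with the horizon length. It is only practical here because the horizon has three steps and three levels.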

4. Role of Vast Datasets

The efficacy of E2DMM is fundamentally predicated on the availability, quality, and comprehensive nature of vast, diverse datasets. These datasets serve as the fuel for deep learning algorithms and the bedrock for constructing and validating digital twins. The granularity, temporal resolution, and spatial coverage of these data types directly impact the accuracy and robustness of the E2DMM framework.

4.1 Climate Data

Accurate and granular climate data is absolutely essential for simulating and predicting building energy performance. Buildings are open systems, constantly interacting with their external environment. Key climate variables include:

-

Temperature and Humidity: Ambient dry-bulb temperature and relative humidity drive heat transfer through the building envelope and dictate cooling/heating loads. Historical hourly or sub-hourly data are needed for model calibration, while real-time forecasts are critical for predictive control.

-

Solar Radiation: Direct, diffuse, and global horizontal solar radiation significantly impacts cooling loads (solar heat gain through windows) and can be harnessed for passive heating or active solar energy systems. Data from local weather stations, satellite measurements, and meteorological models provide necessary inputs.

-

Wind Speed and Direction: Wind affects convective heat transfer across building surfaces, influences infiltration rates, and can impact the performance of natural ventilation systems.

-

Precipitation: While less directly impacting energy consumption on an hourly basis, rain and snow can affect surface properties, solar reflectance, and operational schedules.

Sources for climate data include governmental meteorological agencies, local airport weather stations and private weather data providers. Typical-year datasets such as TMY (Typical Meteorological Year) files are another source. These are commonly distributed in the EnergyPlus Weather (EPW) format and represent typical rather than extreme conditions. A key challenge is obtaining geographically representative data, especially in urban microclimates where local conditions can differ significantly from those at regional weather stations.
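As an illustration of working with EPW weather files, the sketch below reads hourly records and computes heating degree-hours. It assumes the standard EPW layout: eight header lines followed by comma-separated hourly records. Counting from 1, dry-bulb temperature sits in field 7 and relative humidity in field 9. Global horizontal, direct normal and diffuse horizontal radiation sit in fields 14, 15 and 16. Wind direction and speed sit in fields 21 and 22. The 18 °C base temperature is an illustrative assumption.

```python
import csv
import io

# Zero-based column indices in a standard EPW data record.
EPW_FIELDS = {
    "dry_bulb_c": 6,
    "rel_humidity_pct": 8,
    "ghi_wh_m2": 13,
    "dni_wh_m2": 14,
    "dhi_wh_m2": 15,
    "wind_dir_deg": 20,
    "wind_speed_m_s": 21,
}
EPW_HEADER_LINES = 8


def read_epw(text):
    """Parse EPW file contents into a list of dicts, one per hourly record."""
    rows = list(csv.reader(io.StringIO(text)))[EPW_HEADER_LINES:]
    records = []
    for row in rows:
        if not row:
            continue  # tolerate blank lines
        rec = {"month": int(row[1]), "day": int(row[2]), "hour": int(row[3])}
        rec.update({name: float(row[i]) for name, i in EPW_FIELDS.items()})
        records.append(rec)
    return records


def heating_degree_hours(records, base_c=18.0):
    """Sum of (base - outdoor dry-bulb) over the hours colder than the base temperature."""
    return sum(max(0.0, base_c - r["dry_bulb_c"]) for r in records)
```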

4.2 Material Properties

Detailed and accurate information regarding the thermal and optical properties of building materials is paramount for realistic energy modeling. These properties govern how heat is transferred, stored, and reflected within the building structure and envelope. Key material properties include:

-

Thermal Conductivity (k-value): Measures a material’s ability to conduct heat. Critical for calculating heat transfer through walls, roofs, and floors.

-

Specific Heat Capacity (c_p): Represents the amount of heat energy required to raise the temperature of a unit mass of the material by one degree. Influences the thermal mass effect – a material’s ability to store and release heat, dampening indoor temperature fluctuations.

-

Density (ρ): Combined with specific heat, determines the volumetric heat capacity, crucial for transient thermal simulations.

-

U-value (Overall Heat Transfer Coefficient): For composite elements like walls or windows, the U-value quantifies the rate of heat transfer per unit area per unit temperature difference. Lower U-values indicate better insulation.

-

Solar Absorptance and Emissivity: These properties dictate how much solar radiation a surface absorbs versus reflects, and how effectively it radiates heat away. Critical for external surfaces and glazing.

-

Solar Heat Gain Coefficient (or the older Shading Coefficient) and Visible Transmittance: For windows, these quantify how much solar heat and visible light, respectively, are transmitted into the building.

Data for material properties are typically sourced from manufacturer specifications, engineering handbooks, building codes, and standardized material databases. However, actual in-situ properties can deviate due to installation quality, degradation over time, or variations in manufacturing batches. Non-destructive testing methods can sometimes be used to infer actual properties post-construction.
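The U-value of a layered wall follows from adding the thermal resistances of its layers in series: U = 1 / (R_si + Σ d_i/k_i + R_se). The sketch below assumes the conventional surface resistances for horizontal heat flow used in ISO 6946: 0.13 m²·K/W internal and 0.04 m²·K/W external. The layer properties are illustrative. The sketch ignores thermal bridges and air gaps. It also reports each layer’s volumetric heat capacity ρ·c_p.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    thickness_m: float
    conductivity_w_mk: float    # k
    density_kg_m3: float        # rho
    specific_heat_j_kgk: float  # c_p

    @property
    def resistance(self) -> float:
        """Conductive resistance d / k in m^2*K/W."""
        return self.thickness_m / self.conductivity_w_mk

    @property
    def volumetric_heat_capacity(self) -> float:
        """rho * c_p in J/(m^3*K): heat stored per cubic metre per degree."""
        return self.density_kg_m3 * self.specific_heat_j_kgk


def u_value(layers, r_si=0.13, r_se=0.04):
    """Overall heat transfer coefficient in W/(m^2*K) for layers in series."""
    total_r = r_si + sum(layer.resistance for layer in layers) + r_se
    return 1.0 / total_r


# Illustrative wall build-up (property values are typical orders of magnitude only).
wall = [
    Layer("brick", 0.10, 0.77, 1700.0, 800.0),
    Layer("mineral wool", 0.10, 0.04, 30.0, 1030.0),
]
u = u_value(wall)  # roughly 0.36 W/(m^2*K)
```

The insulation layer contributes most of the resistance, while the brick provides most of the thermal mass. This illustrates why conductivity and heat capacity are listed as separate properties.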

4.3 Occupancy Data

Human presence and activities constitute one of the most dynamic and influential factors affecting building energy consumption. Understanding occupancy patterns is vital for accurate predictions of internal heat gains, fresh air requirements, and lighting loads. Relevant occupancy data includes:

-

Occupant Count: The number of people present in specific zones at different times. Each occupant contributes sensible and latent heat (metabolic heat gains) and requires ventilation.

-

Occupancy Schedules: Typical daily and weekly schedules for different zones (e.g., office hours, meeting room usage, classroom schedules).

-

Activity Levels: The type of activities occupants are engaged in (e.g., sedentary, light work, intense exercise) influences their metabolic heat generation.

-

Occupant Behavior: How occupants interact with building controls (e.g., adjusting thermostats, opening windows, switching lights on/off) significantly impacts energy use. This behavior is complex and often difficult to predict.

Occupancy data can be collected through various methods: motion detectors (PIR sensors), CO₂ sensors (indirectly indicating occupancy and ventilation needs), Wi-Fi signal triangulation, access card logs, and even anonymized image processing or depth cameras for more granular people counting. The challenge lies in acquiring sufficiently granular and representative data while respecting privacy concerns.
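CO₂ sensors can be turned into an approximate head count with a steady-state mass balance. At equilibrium, the CO₂ generated by N occupants equals the CO₂ removed by ventilation, so N ≈ Q (C_in - C_out) / G. The sketch assumes a known outdoor-air flow Q and steady-state conditions. It also assumes a per-person generation rate G of about 0.0052 L/s, a commonly used figure for a sedentary adult. In practice CO₂ lags occupancy changes, so dynamic estimators are often preferred.

```python
def estimate_occupants(vent_l_s, co2_in_ppm, co2_out_ppm, gen_l_s_per_person=0.0052):
    """Steady-state occupant estimate from a CO2 mass balance.

    vent_l_s: outdoor-air ventilation rate (L/s)
    co2_in_ppm, co2_out_ppm: indoor and outdoor CO2 concentrations (ppm)
    gen_l_s_per_person: assumed CO2 generation per occupant (L/s)
    """
    delta_fraction = max(0.0, co2_in_ppm - co2_out_ppm) * 1e-6  # ppm -> volume fraction
    co2_removed_l_s = vent_l_s * delta_fraction
    return co2_removed_l_s / gen_l_s_per_person


# Illustrative open-plan zone: 500 L/s outdoor air, 1000 ppm inside, 400 ppm outside.
n = estimate_occupants(500.0, 1000.0, 400.0)  # about 58 people at steady state
```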

4.4 Sensor Data

Real-time sensor data forms the critical link between the physical building and its digital twin, enabling dynamic monitoring and control. This data stream captures the operational performance of various building systems and the environmental conditions within. Key types of sensor data include:

-

HVAC System Data: Supply and return air temperatures, coil temperatures, chilled water/hot water flow rates and temperatures, fan speeds, damper positions, pump statuses, chiller power consumption, boiler fuel consumption, and pressure differentials. These provide insight into the performance and efficiency of heating, cooling, and ventilation equipment.

-

Lighting System Data: Light levels (lux sensors), lighting power consumption, and occupancy-based or daylight-harvesting control statuses.

-

Electrical System Data: Whole-building electricity consumption (from smart meters), sub-metered data for specific systems (e.g., IT loads, plug loads), voltage, current, and power factor. These data are crucial for understanding electricity demand profiles and identifying peak loads.

-

Indoor Environmental Quality (IEQ) Data: Beyond temperature and humidity, sensors for CO₂, volatile organic compounds (VOCs), and particulate matter (PM2.5, PM10) provide a comprehensive picture of indoor air quality, which is intrinsically linked to occupant comfort and health, and therefore ventilation energy use.

-

Water Usage Data: Flow meters for domestic hot water, cooling towers, and irrigation systems provide insights into water consumption, which has an embedded energy footprint.

The reliability, accuracy, and sampling rate of sensor data are paramount. Issues like sensor drift, calibration errors, and data transmission failures must be addressed through robust data validation and cleaning pipelines. High-frequency data (e.g., minute-by-minute) is often required for real-time control and rapid anomaly detection.
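A data validation pipeline typically starts with simple rule-based checks. The sketch below flags three of the problems mentioned above. The first is physically implausible values. The second is gaps caused by transmission failures. The third is flat-lined (stuck) readings, which often indicate a failed sensor. The thresholds are illustrative assumptions. Sensor drift, by contrast, usually needs comparison against a reference sensor or the digital twin’s prediction.

```python
def validate_series(series, lo, hi, max_gap_s, stuck_len):
    """Flag issues in a time-ordered list of (timestamp_s, value) sensor readings.

    lo, hi: plausible value range; max_gap_s: largest acceptable spacing between
    samples; stuck_len: number of identical consecutive values treated as stuck.
    A stuck run is flagged once, at the sample where it reaches stuck_len.
    Returns a list of (index, issue) tuples.
    """
    issues = []
    run = 1  # length of the current run of identical values
    for i, (ts, value) in enumerate(series):
        if not lo <= value <= hi:
            issues.append((i, "out_of_range"))
        if i > 0:
            prev_ts, prev_value = series[i - 1]
            if ts - prev_ts > max_gap_s:
                issues.append((i, "gap"))
            run = run + 1 if value == prev_value else 1
            if run == stuck_len:
                issues.append((i, "stuck"))
    return issues


# Minute-by-minute zone temperatures (deg C) with a stuck run, a spike and a gap.
readings = [(0, 21.0), (60, 21.0), (120, 21.0), (180, 85.0), (600, 21.5)]
problems = validate_series(readings, lo=-10.0, hi=50.0, max_gap_s=120, stuck_len=3)
```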

4.5 Building Management System (BMS) Data

BMS platforms are the nerve centers of modern buildings, orchestrating the operation of various subsystems. The historical and real-time data residing within the BMS are invaluable for E2DMM. This includes:

-

Control Schedules: Setpoints for temperature, lighting, ventilation based on time-of-day or day-of-week.

-

Operational Modes: Information on whether systems are in occupied, unoccupied, setback, or optimal start/stop modes.

-

Alarms and Fault Logs: Records of system malfunctions, overridden controls, or critical events, which are crucial for FDD and understanding system reliability.

-

Historical Trends: Long-term archives of sensor readings and control commands, offering a rich context for understanding past building performance and learning operational patterns.

Integrating BMS data often involves navigating proprietary protocols and data formats, requiring specialized gateways and APIs to extract and normalize the information for the digital twin.
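Much of this normalization amounts to mapping vendor-specific point names and units onto one shared vocabulary. The sketch below assumes a hypothetical naming convention (AHU1.SAT, VAV-2-03:ZN-T, MTR3_KW) and a hand-written rule table. The class names are modelled on Brick Schema class names, but a real deployment would draw them from a maintained ontology such as Brick Schema or Project Haystack rather than hard-coding them.

```python
import re

# Hypothetical vendor naming conventions -> (common point class, unit used by the vendor).
POINT_RULES = [
    (re.compile(r"^AHU(?P<equip>\d+)\.SAT$"), "Supply_Air_Temperature_Sensor", "degF"),
    (re.compile(r"^VAV-(?P<equip>[\d-]+):ZN-T$"), "Zone_Air_Temperature_Sensor", "degF"),
    (re.compile(r"^MTR(?P<equip>\d+)_KW$"), "Electric_Power_Sensor", "kW"),
]

def to_common_unit(value, unit):
    """Convert Fahrenheit to Celsius; pass other units through unchanged."""
    if unit == "degF":
        return (value - 32.0) * 5.0 / 9.0, "degC"
    return value, unit

def normalize(point_name, value):
    """Map one raw BMS point reading to a normalized record, or None if unrecognized."""
    for pattern, point_class, unit in POINT_RULES:
        match = pattern.match(point_name)
        if match:
            converted, common_unit = to_common_unit(value, unit)
            return {
                "equipment": match.group("equip"),
                "class": point_class,
                "value": round(converted, 2),
                "unit": common_unit,
                "source_name": point_name,
            }
    return None

records = [normalize(name, v) for name, v in
           [("AHU1.SAT", 55.0), ("VAV-2-03:ZN-T", 72.0), ("MTR3_KW", 120.5), ("XYZ?", 1.0)]]
```

Unrecognized points return None so they can be routed to manual review instead of silently entering the digital twin.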

4.6 Energy Bills and Smart Meter Data

While potentially less granular than real-time sensor data, historical energy bills and smart meter data provide the definitive record of energy consumption and cost. These datasets are essential for:

-

Baseline Performance: Establishing a baseline against which optimization efforts can be measured.

-

Cost Analysis: Understanding energy expenditures and the financial impact of optimization strategies.

-

Peak Demand Analysis: Identifying periods of high electricity demand, which often incur significant charges, informing strategies for peak shaving or load shifting.

-

Tariff Structures: Incorporating dynamic pricing, time-of-use (TOU) rates, or demand charges into optimization algorithms to minimize operational costs.

Smart meters typically provide interval data (e.g., 15-minute or hourly), offering a more detailed consumption profile than monthly utility bills.
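To illustrate how interval data feeds peak-demand and tariff analysis, the sketch below converts 15-minute readings (kWh per interval) into average demand in kW, takes the highest value as the billing peak, and prices consumption under a two-period time-of-use tariff plus a demand charge. The tariff windows and rates are hypothetical placeholders, and real tariffs differ in how billing demand is measured (for example over 15- or 30-minute windows).

```python
from datetime import datetime, timedelta

INTERVAL_HOURS = 0.25    # 15-minute smart-meter interval

# Hypothetical tariff: on-peak 12:00-18:00 on weekdays, off-peak otherwise.
ON_PEAK_RATE = 0.30      # currency units per kWh
OFF_PEAK_RATE = 0.12     # currency units per kWh
DEMAND_CHARGE = 15.0     # currency units per kW of peak demand in the billing period

def is_on_peak(ts):
    return ts.weekday() < 5 and 12 <= ts.hour < 18

def bill(readings):
    """readings: list of (interval_start, kWh consumed in that interval)."""
    energy_cost = 0.0
    peak_kw = 0.0
    for ts, kwh in readings:
        rate = ON_PEAK_RATE if is_on_peak(ts) else OFF_PEAK_RATE
        energy_cost += kwh * rate
        peak_kw = max(peak_kw, kwh / INTERVAL_HOURS)  # average kW over the interval
    return {
        "energy_cost": round(energy_cost, 2),
        "peak_kw": peak_kw,
        "demand_cost": round(peak_kw * DEMAND_CHARGE, 2),
    }

# Four consecutive 15-minute intervals on a Monday, straddling the start of the on-peak window.
start = datetime(2024, 1, 8, 11, 30)
readings = [(start + timedelta(minutes=15 * i), kwh) for i, kwh in enumerate([10, 12, 20, 15])]
result = bill(readings)
```

An optimization algorithm can evaluate candidate operating schedules with a function like this, for instance to test whether shifting load out of the on-peak window, or flattening the single highest interval, lowers the total bill.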


5. Software and Tools for E2DMM

The implementation of E2DMM relies on a sophisticated ecosystem of software and tools, each specializing in different aspects of modeling, simulation, machine learning, and data management. The successful integration and interoperability of these tools are paramount.

5.1 Building Performance Simulation Software

These tools are foundational for creating and validating the physics-based components of a digital twin. They model the thermal, lighting, and ventilation dynamics of a building with high fidelity.

-

EnergyPlus: Developed by the U.S. Department of Energy (DOE), EnergyPlus is an open-source, whole-building energy simulation program that models energy consumption for heating, cooling, lighting, ventilation, and other energy flows. It uses a heat balance method to calculate zone temperatures and loads, accounting for complex interactions between building envelope, HVAC systems, and internal loads. Its capabilities include detailed HVAC component modeling, hourly or sub-hourly simulations, and advanced features like natural ventilation and radiant heating/cooling. EnergyPlus is often used for creating the core physics model within a digital twin, against which real-time data is compared and optimization scenarios are run (en.wikipedia.org).

-

TRNSYS (Transient System Simulation Tool): A modular simulation program primarily used for transient thermal performance analysis of systems. TRNSYS is highly flexible, allowing users to build complex energy systems from individual components (e.g., solar collectors, storage tanks, heat pumps, building zones). Its modular nature makes it suitable for modeling innovative or unconventional building systems and for co-simulation with external control algorithms or machine learning models.

-

IDA ICE (Indoor Climate and Energy): A comprehensive simulation program known for its user-friendly interface and detailed modeling of indoor climate and energy use. It excels in simulating complex HVAC systems and advanced control strategies, making it a powerful tool for detailed performance analysis and optimization.

-

IESVE (Integrated Environmental Solutions Virtual Environment): A suite of integrated tools for building performance analysis, covering energy, daylight, CFD (Computational Fluid Dynamics), and comfort. It provides robust capabilities for early-stage design analysis through to detailed operational modeling, facilitating the creation of comprehensive building digital twins.

These BPS tools are often used in conjunction with deep learning models, where the BPS model provides the physical context and high-fidelity simulation environment, and the deep learning model handles pattern recognition, prediction, and optimization of control strategies.
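When EnergyPlus serves as the physics core, a digital twin pipeline typically launches simulations from a script. The sketch below builds an EnergyPlus command line in Python and runs it with subprocess. It assumes the energyplus executable is on the PATH and accepts the --weather, --output-directory and --readvars options of the EnergyPlus command-line interface; the file names in the usage comment are placeholders.

```python
import shutil
import subprocess
from pathlib import Path

def build_command(idf, epw, out_dir, readvars=True, exe="energyplus"):
    """Assemble the command line for one EnergyPlus run."""
    cmd = [exe, "--weather", str(epw), "--output-directory", str(out_dir)]
    if readvars:
        cmd.append("--readvars")   # also run ReadVarsESO to write CSV output
    cmd.append(str(idf))           # the input file is the final positional argument
    return cmd

def run_simulation(idf, epw, out_dir, exe="energyplus"):
    """Run EnergyPlus and return the completed process (raises on a non-zero exit)."""
    if shutil.which(exe) is None:
        raise FileNotFoundError(f"{exe} executable not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    return subprocess.run(build_command(idf, epw, out_dir, exe=exe),
                          check=True, capture_output=True, text=True)

# Example (placeholder paths):
# run_simulation("office.idf", "site_weather.epw", "runs/baseline")
```

Keeping command construction separate from execution makes it easy to generate many variants (for example, one per control scenario) and dispatch them in parallel.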

5.2 Machine Learning Frameworks

These frameworks provide the essential infrastructure for developing, training, and deploying deep learning algorithms integral to E2DMM.

-

TensorFlow: An open-source, end-to-end machine learning platform developed by Google. It offers a comprehensive ecosystem of tools, libraries, and community resources for building and deploying ML-powered applications. TensorFlow is highly scalable, supporting deployment across various platforms (CPUs, GPUs, TPUs, mobile, edge devices), making it suitable for both complex model training and real-time inference within building control systems.

-

PyTorch: Another popular open-source machine learning library developed by Facebook AI Research. PyTorch is known for its flexibility, Python-first approach, and dynamic computational graph, which facilitates rapid prototyping and experimentation. It is widely favored in academic research and increasingly in industry for its ease of use in developing complex deep learning models like RNNs, LSTMs, and Transformers for energy forecasting and anomaly detection.

-

Scikit-learn: While not a deep learning framework, Scikit-learn is a widely used Python library for traditional machine learning algorithms (e.g., regression, classification, clustering). Within E2DMM it supports data pre-processing, feature engineering, simpler predictive models, and baseline comparisons against which deep learning models can be judged.

These frameworks provide the mathematical primitives and optimization routines necessary to train the deep learning components that predict energy loads, optimize control parameters, or detect faults within the E2DMM architecture.
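As an example of the baselining comparisons mentioned for Scikit-learn, monthly energy use can be regressed on heating degree-days, and the fitted line then serves as a weather-normalized baseline for measuring savings. The sketch below fits ordinary least squares in pure Python so it runs without dependencies (Scikit-learn's LinearRegression would serve the same purpose). The data are synthetic, constructed to lie exactly on a line so the fit is easy to check.

```python
def fit_ols(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def predict(model, x):
    intercept, slope = model
    return [intercept + slope * xi for xi in x]

# Synthetic baseline year: monthly heating degree-days and kWh (exactly 1200 + 2.5 * HDD).
hdd = [520, 430, 350, 210, 90, 20, 0, 5, 60, 180, 330, 480]
kwh = [1200 + 2.5 * d for d in hdd]
model = fit_ols(hdd, kwh)

# Savings = consumption the baseline predicts for the new period minus what was metered.
new_hdd = [500, 410]
metered = [2300.0, 2150.0]
savings = [b - m for b, m in zip(predict(model, new_hdd), metered)]
```

Real baselines usually need more care, for example change-point models for buildings with both heating and cooling loads, and goodness-of-fit checks before the baseline is trusted.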

5.3 Digital Twin Platforms

Dedicated digital twin platforms offer integrated environments for the creation, management, and scaling of digital twins. They provide essential services for data ingestion, real-time analytics, visualization, and API integration.

-

Siemens MindSphere: An industrial IoT-as-a-service solution that provides a cloud-based operating system for creating and operating digital twins. It offers robust connectivity, data management, advanced analytics, and application development capabilities, enabling comprehensive lifecycle management for assets and systems, including buildings.

-

IBM Watson IoT Platform: A cloud-based service that allows organizations to connect, manage, and analyze data from IoT devices. It provides tools for device registration, data ingestion, real-time analytics, and integration with other IBM Watson AI services, which can be leveraged for advanced building energy insights.

-

Microsoft Azure Digital Twins: A Platform as a Service (PaaS) offering that allows users to create comprehensive models of physical environments. It uses the Digital Twins Definition Language (DTDL), a JSON-LD-based language, to define entity types and their relationships; instances of these types form a twin graph that can represent entire buildings, campuses, or even cities. Azure Digital Twins supports real-time data integration, advanced analytics, and custom application development through its APIs.

-

Dassault Systèmes 3DEXPERIENCE Platform: A business experience platform providing a collaborative environment for designing, simulating, and managing products and experiences. While broader in scope, its capabilities for creating virtual representations and simulations of complex systems are highly relevant for detailed building digital twins, especially for integrating BIM data and advanced simulation.

These platforms provide the backbone for integrating disparate data sources, running real-time simulations, deploying machine learning models, and visualizing building performance, thereby forming the operational core of E2DMM.
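Platforms such as Azure Digital Twins represent a building as a graph of typed entities joined by named relationships. The Python sketch below mimics that structure with plain data classes so the idea is visible without any cloud service. It is not the API of any platform listed above; the class names, the "contains" relationship, and the sample building are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Twin:
    twin_id: str
    kind: str                                    # e.g. "Building", "Floor", "Zone", "Sensor"
    properties: dict = field(default_factory=dict)

@dataclass
class TwinGraph:
    twins: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)    # (source_id, relationship, target_id)

    def add(self, twin):
        self.twins[twin.twin_id] = twin

    def relate(self, source_id, relationship, target_id):
        self.edges.append((source_id, relationship, target_id))

    def targets(self, source_id, relationship):
        return [self.twins[t] for s, r, t in self.edges
                if s == source_id and r == relationship]

    def descendants(self, root_id, relationship="contains"):
        """Depth-first walk along one relationship type, yielding every twin below root_id."""
        stack = [root_id]
        while stack:
            for child in self.targets(stack.pop(), relationship):
                yield child
                stack.append(child.twin_id)

g = TwinGraph()
for tid, kind in [("bldg", "Building"), ("fl1", "Floor"), ("z101", "Zone"),
                  ("z102", "Zone"), ("t101", "Sensor"), ("t102", "Sensor")]:
    g.add(Twin(tid, kind))
for src, dst in [("bldg", "fl1"), ("fl1", "z101"), ("fl1", "z102"),
                 ("z101", "t101"), ("z102", "t102")]:
    g.relate(src, "contains", dst)

# Live values pushed from telemetry would update properties like these.
g.twins["t101"].properties["temperature"] = 22.4
g.twins["t102"].properties["temperature"] = 24.1

sensors = [t for t in g.descendants("bldg") if t.kind == "Sensor"]
```

Because relationships are explicit, queries such as "all sensors in this building" become graph traversals rather than string matching on point names.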

5.4 Data Management and Visualization Tools

Effective E2DMM requires robust data infrastructure and intuitive visualization capabilities.

-

Databases: Various database types are essential: SQL databases (e.g., PostgreSQL, MySQL) for structured building information; NoSQL databases (e.g., MongoDB, Cassandra) for flexible storage of diverse data types; and crucially, Time-Series Databases (e.g., InfluxDB, TimescaleDB) optimized for storing and querying high-volume, time-stamped sensor data with high efficiency.

-

ETL (Extract, Transform, Load) Tools: Tools like Apache NiFi, Talend, or custom Python scripts are used to extract data from various sources, transform it into a consistent format, clean it, and load it into the digital twin’s data store or ML model inputs. This addresses the significant challenge of data heterogeneity.

-

Dashboards and Visualization Platforms: Tools like Grafana, Tableau, or custom web-based dashboards are used to present real-time and historical building performance data, energy consumption trends, predicted values, optimization recommendations, and IEQ metrics in an easily digestible format for facility managers and occupants. These visualizations are critical for monitoring, diagnostics, and decision-making.
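The extract-transform-load pattern can be sketched with the Python standard library alone. Below, CSV text stands in for a BMS export (extract), timestamps are parsed and Fahrenheit readings converted (transform), and rows are loaded into an in-memory SQLite table indexed on time and then queried for hourly averages. SQLite is only a stand-in for a dedicated time-series database such as InfluxDB or TimescaleDB, and the column names are hypothetical.

```python
import csv
import io
import sqlite3
from datetime import datetime

RAW_EXPORT = """timestamp,point,value,unit
2024-03-04 09:00,ZN-T,71.6,degF
2024-03-04 09:30,ZN-T,73.4,degF
2024-03-04 10:00,ZN-T,22.5,degC
2024-03-04 10:30,ZN-T,bad,degC
"""

def transform(row):
    """Parse one CSV row into (iso_timestamp, point, value_degC), or None if unusable."""
    try:
        value = float(row["value"])
    except ValueError:
        return None                                # drop non-numeric readings
    if row["unit"] == "degF":
        value = (value - 32.0) * 5.0 / 9.0
    ts = datetime.strptime(row["timestamp"], "%Y-%m-%d %H:%M")
    return ts.isoformat(timespec="minutes"), row["point"], value

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (ts TEXT, point TEXT, value REAL)")
conn.execute("CREATE INDEX idx_readings_ts ON readings (ts)")

rows = (transform(r) for r in csv.DictReader(io.StringIO(RAW_EXPORT)))
conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", [r for r in rows if r is not None])

# Hourly mean per point: truncate the ISO timestamp to 'YYYY-MM-DDTHH'.
hourly = conn.execute(
    "SELECT substr(ts, 1, 13) AS hour, point, AVG(value) "
    "FROM readings GROUP BY hour, point ORDER BY hour"
).fetchall()
```

A dedicated time-series database adds what this sketch lacks, such as retention policies, compression, and built-in time-bucketing functions.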


6. Case Studies

The theoretical underpinnings and technological components of E2DMM are best understood through their practical application. The following case studies illustrate the tangible benefits and sophisticated capabilities of E2DMM in addressing complex building energy optimization challenges.

6.1 Smart Building Energy Optimization with Deep Reinforcement Learning, Physics-Informed Neural Networks, and Blockchain

One study proposed a multi-faceted framework for smart building energy optimization that embodies the core principles of E2DMM by combining three technologies (arxiv.org). The framework integrated:

-

Deep Reinforcement Learning (DRL) Agents: DRL agents were trained using data from high-fidelity digital twins to optimize energy consumption in real-time. The digital twin provided a realistic, simulated environment where the DRL agent could learn optimal control policies through trial and error, without impacting the physical building. The DRL agent’s objective was to minimize energy consumption and cost while maintaining occupant comfort. This ‘learning-in-the-loop’ approach allowed the DRL agent to continuously adapt and improve its control strategies based on simulated and real-world feedback.

-

Physics-Informed Neural Networks (PINNs): To enhance the accuracy, interpretability, and physical consistency of the optimization process, PINNs were seamlessly embedded within the framework. These PINNs were designed to model complex thermal dynamics and energy flows within the building, ensuring that the DRL agent’s control actions adhered to fundamental physical laws (e.g., heat transfer, energy conservation). By providing physics-constrained predictions, the PINNs acted as a ‘smart guardian,’ preventing the DRL agent from suggesting physically unrealistic or inefficient control strategies.

-

Blockchain (BC) Technology: To address critical concerns regarding data security, privacy, and transparent communication across the broader smart grid infrastructure, Blockchain technology was incorporated. BC facilitated secure and immutable logging of energy transactions, control decisions, and renewable energy generation data. This ensured data integrity, provided an auditable trail, and enabled trusted peer-to-peer energy trading within a microgrid context.
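The core idea behind a physics-informed model can be shown with its composite loss: a data term that fits measurements plus a penalty on how badly a prediction violates a governing equation. The sketch below evaluates such a loss for a predicted zone-temperature trajectory, using a single-zone lumped resistance-capacitance (1R1C) energy balance, C dT/dt = (T_out - T)/R + Q, as the physics constraint. The 1R1C model, its parameter values, and the weighting factor lam are simplifying assumptions for illustration; the cited framework models richer thermal dynamics, and a real PINN would minimize this loss over network weights with automatic differentiation rather than merely evaluating it.

```python
def physics_residuals(temps, t_out, heat, dt, R, C):
    """Forward-difference residual of C*dT/dt - [(T_out - T)/R + Q] at each step (kW)."""
    residuals = []
    for k in range(len(temps) - 1):
        dT_dt = (temps[k + 1] - temps[k]) / dt
        residuals.append(C * dT_dt - ((t_out[k] - temps[k]) / R + heat[k]))
    return residuals

def composite_loss(pred, measured, t_out, heat, dt, R, C, lam=1.0):
    """Return (total, data_term, physics_term) for a predicted temperature trajectory."""
    data_term = sum((p - m) ** 2 for p, m in zip(pred, measured)) / len(measured)
    res = physics_residuals(pred, t_out, heat, dt, R, C)
    physics_term = sum(r ** 2 for r in res) / len(res)
    return data_term + lam * physics_term, data_term, physics_term

# Hypothetical zone: R = 2 K/kW, C = 10 kWh/K, hourly steps, 5 degC outside, 7.5 kW heating.
R, C, dt = 2.0, 10.0, 1.0
t_out, heat = [5.0] * 3, [7.5] * 3
measured = [20.0, 20.0, 20.0]

steady = composite_loss([20.0, 20.0, 20.0], measured, t_out, heat, dt, R, C)
jumpy = composite_loss([20.0, 25.0, 20.0], measured, t_out, heat, dt, R, C)
```

The steady trajectory satisfies the energy balance exactly (7.5 kW of heating balances envelope losses of (20 - 5)/2 kW), so its physics term is zero. The jump to 25 degC within one hour would require far more heat than is supplied and is penalized heavily, which is the "guardian" effect described above.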

The model was trained and validated on datasets that combined granular smart meter energy consumption data, real-time outputs from on-site renewable energy sources (e.g., solar panels), dynamic electricity pricing signals from the grid, and personalized user preferences collected from a network of IoT devices (e.g., comfort ratings, preferred setpoints). Integrating these diverse data streams was crucial to the holistic nature of the E2DMM approach.

The results demonstrated superior predictive performance: the framework explained 97.8% of the variance in the data (R² = 0.978), indicating a very good fit. Beyond enhancing energy efficiency, the framework delivered substantial practical benefits: it reduced energy costs by 35%, maintained a user comfort index of 96% (a critical and often competing objective), and increased renewable energy utilization to 40% of the total energy consumed. This case study illustrates E2DMM’s capacity for multi-objective optimization, balancing energy savings, cost reduction, occupant satisfaction, and renewable energy integration through a fusion of AI, physics, and distributed ledger technologies.
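Multi-objective control of this kind is usually encoded in the reward signal a DRL agent maximizes. The sketch below shows one plausible per-step reward that penalizes grid energy cost and comfort-band violations and credits on-site renewable use. The weights, comfort band, and function name are hypothetical; the cited study's exact reward formulation is not described in this report.

```python
def step_reward(grid_kwh, price, zone_temp, pv_used_kwh,
                comfort_band=(21.0, 24.0), w_cost=1.0, w_comfort=2.0, w_pv=0.1):
    """Per-timestep reward for a hypothetical HVAC control agent (higher is better)."""
    cost = grid_kwh * price                      # money spent on grid electricity this step
    low, high = comfort_band
    # Degrees outside the comfort band (zero when the zone is inside it).
    violation = max(0.0, low - zone_temp) + max(0.0, zone_temp - high)
    return -w_cost * cost - w_comfort * violation + w_pv * pv_used_kwh

in_band = step_reward(grid_kwh=10.0, price=0.2, zone_temp=22.0, pv_used_kwh=5.0)
too_warm = step_reward(grid_kwh=10.0, price=0.2, zone_temp=25.5, pv_used_kwh=5.0)
```

The ratio of w_comfort to w_cost determines where a trained policy settles on the energy-comfort trade-off, which is why cost savings and comfort results need to be reported together.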

6.2 Double-Skin Facade Optimization with Explainable Artificial Intelligence

Another compelling application of E2DMM principles involved the design and optimization of a double-skin solar chimney with integrated energy storage components (academic.oup.com). Double-skin facades (DSFs) are advanced building envelopes that consist of two layers of glazing separated by an air cavity, which can be naturally or mechanically ventilated. Solar chimneys leverage buoyancy-driven airflow to enhance natural ventilation and extract heat. The complexity of optimizing such systems, involving intricate heat transfer, airflow dynamics, and material interactions, often necessitates advanced computational approaches.

This study specifically demonstrated the suitability of artificial neural network (ANN) twins as computationally efficient surrogates for complex, high-fidelity CFD (Computational Fluid Dynamics) simulations. Instead of running time-consuming CFD models for every design iteration, the trained ANN twin, functioning as a ‘digital twin surrogate,’ could rapidly predict the performance of the double-skin facade under various design parameters and environmental conditions. This significantly accelerated the design optimization process.
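The surrogate workflow can be illustrated in miniature: sample an expensive simulator at a few design points, fit a cheap model to those samples, then search the cheap model densely. In the sketch below the "expensive" function is an analytic stand-in for a CFD run, and a quadratic least-squares fit replaces the ANN purely to keep the example dependency-free (real CFD responses are rarely quadratic, which is why the study used an ANN). The design variable (cavity width) and its range are hypothetical.

```python
def expensive_simulation(cavity_width_m):
    """Stand-in for a costly CFD run: returns a performance score (hypothetical)."""
    return 40.0 - 180.0 * (cavity_width_m - 0.45) ** 2

def fit_quadratic(xs, ys):
    """Least-squares fit y = a + b*x + c*x^2 by solving the 3x3 normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]            # sums of x^0 .. x^4
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    M = [[s[0], s[1], s[2], t[0]], [s[1], s[2], s[3], t[1]], [s[2], s[3], s[4], t[2]]]
    for col in range(3):                                        # Gaussian elimination
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    coef = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):                                  # back substitution
        coef[r] = (M[r][3] - sum(M[r][c] * coef[c] for c in range(r + 1, 3))) / M[r][r]
    return coef                                                 # [a, b, c]

# 1) Run the expensive model at a handful of design points.
samples = [0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
scores = [expensive_simulation(w) for w in samples]

# 2) Fit the cheap surrogate.
a, b, c = fit_quadratic(samples, scores)

def surrogate(w):
    return a + b * w + c * w ** 2

# 3) Search the surrogate densely; a thousand evaluations cost almost nothing.
grid = [0.2 + i * 0.0005 for i in range(1001)]
best_width = max(grid, key=surrogate)
```

Only six "expensive" runs were needed; the dense search that follows touches the surrogate alone, which is where the time savings come from.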

Key aspects of this E2DMM application included:

-

High-Fidelity Modeling: Initial complex CFD simulations were conducted to generate a comprehensive dataset describing the facade’s thermal and airflow performance across a wide range of design parameters (e.g., cavity width, vent sizes, material properties) and operating conditions (e.g., solar radiation, ambient temperature).

-

ANN Twin Creation: A deep learning model (ANN) was trained on this large CFD-generated dataset. The trained ANN effectively learned the intricate, non-linear relationships between the input design parameters and the facade’s performance indicators (e.g., ventilation rate, thermal efficiency, temperature distribution). This ANN then served as the computationally efficient ‘twin’ of the complex CFD model.

-

Explainable AI (XAI): A crucial element of this study was the application of explainable artificial intelligence techniques. While the ANN provided accurate predictions, XAI methods were used to uncover why specific design choices led to certain performance outcomes. This enhanced the transparency and interpretability of the AI model, allowing designers to gain insights into the underlying physics and make informed design decisions, rather than blindly following black-box recommendations.

-

Optimization Study: Leveraging the rapid predictive capability of the ANN twin, an extensive optimization study was performed to identify the optimal design configurations for the double-skin solar chimney. The ANN twin’s predictions agreed closely with the CFD results, with coefficients of determination (R²) ranging from 0.921 to 0.999 across the different performance indicators.
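The goodness-of-fit figures in both case studies (the 97.8% of variance explained in Section 6.1 and the R² values above) are coefficients of determination, R² = 1 - SS_res / SS_tot, which compare a model's squared errors with the spread of the reference data. A minimal implementation follows; the numbers in it are illustrative only, and in practice R² should be computed on held-out simulations that were not used for training.

```python
def r_squared(reference, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((y - p) ** 2 for y, p in zip(reference, predicted))
    ss_tot = sum((y - mean_ref) ** 2 for y in reference)
    return 1.0 - ss_res / ss_tot

# Illustrative values: reference (e.g. CFD) outputs vs. surrogate predictions.
cfd = [1.0, 2.0, 3.0, 4.0]
ann = [1.1, 1.9, 3.2, 3.8]
score = r_squared(cfd, ann)
```

Here SS_tot = 5.0 and SS_res = 0.10, so R² = 0.98: the surrogate explains 98% of the variance in the reference values.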

The optimization results indicated a highly efficient design, achieving a ventilation rate of 2.86 1/h (air changes per hour), which is crucial for indoor air quality, and a thermal efficiency of 37.21% for the solar chimney. This case study shows how E2DMM, through the creation of ‘AI twins’ and the integration of XAI, can streamline complex architectural design processes, leading to highly optimized and energy-efficient building components that are also well understood by human designers.


7. Challenges and Future Directions

While End-to-End Deep Meta Modeling (E2DMM) presents a transformative approach to building energy optimization, its widespread adoption and full potential realization are still accompanied by several significant challenges. Addressing these hurdles will define the trajectory of future research and development in this domain.

7.1 Data Privacy and Security Concerns

The reliance on vast, granular datasets, particularly those pertaining to occupant behavior, energy consumption patterns, and IEQ metrics, raises substantial privacy and security concerns. Collecting, storing, and processing such sensitive information necessitates robust safeguards.

- Challenge: Ensuring compliance with data protection regulations (e.g., GDPR, CCPA), anonymizing data effectively without compromising its utility, and preventing unauthorized access or breaches of building operational data which could have critical infrastructure implications.

- Future Directions: Research into privacy-preserving machine learning techniques (e.g., federated learning, differential privacy) that allow models to learn from decentralized data without direct access to raw, personal information. Blockchain technologies, as used in the framework described in Section 6.1, can also enhance data security and auditability, ensuring data integrity and transparent sharing among trusted parties.
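Federated learning can be illustrated with federated averaging (FedAvg): each building trains on its own data and shares only model parameters, which a coordinator averages with weights proportional to each site's number of training samples. The sketch below shows only that aggregation step, with models reduced to plain lists of weights. The site data are hypothetical, and a production system would add secure aggregation and, where required, differential-privacy noise.

```python
def federated_average(site_updates):
    """site_updates: list of (num_samples, weight_vector). Returns sample-weighted mean weights."""
    total = sum(n for n, _ in site_updates)
    dim = len(site_updates[0][1])
    avg = [0.0] * dim
    for n, weights in site_updates:
        for i, w in enumerate(weights):
            avg[i] += (n / total) * w
    return avg

# Each building trains locally; only these parameter vectors leave the site.
updates = [
    (1000, [0.50, 2.0]),   # building A
    (3000, [0.30, 1.0]),   # building B
]
global_weights = federated_average(updates)
```

Weighting by sample count keeps a building with little data from pulling the shared model as strongly as one with years of history.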

7.2 Need for High-Quality and Comprehensive Datasets

The performance of deep learning models and the accuracy of digital twins depend heavily on the quality, completeness, and diversity of the training data. Real-world building data is often characterized by noise, missing values, inconsistencies, and a lack of semantic interoperability.

- Challenge: Overcoming issues of sensor malfunction, data communication errors, inconsistent labeling, and the proprietary nature of many BMS datasets. There is also a lack of standardized public datasets that span diverse building types, climates, and operational conditions for comprehensive model training and benchmarking.

- Future Directions: Development of robust data imputation and cleaning algorithms specifically tailored for building energy data. Promotion of open-source datasets and common data standards (e.g., Brick Schema, Project Haystack, IFC) to facilitate data sharing and interoperability. Collaborative efforts between academia and industry to create large-scale, anonymized, and well-curated building performance benchmarks.
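A simple and widely used imputation baseline is linear interpolation across short gaps, leaving longer outages unfilled so that they are not papered over with invented values. The sketch assumes evenly spaced samples with None marking missing readings; the max_gap limit is an illustrative choice.

```python
def interpolate_gaps(values, max_gap=3):
    """Linearly fill runs of None no longer than max_gap that have known values on both sides."""
    filled = list(values)
    n = len(values)
    i = 0
    while i < n:
        if values[i] is not None:
            i += 1
            continue
        start = i
        while i < n and values[i] is None:
            i += 1
        end = i                                  # first index after the gap
        gap = end - start
        if start == 0 or end == n or gap > max_gap:
            continue                             # edge gap or long outage: leave as None
        left, right = values[start - 1], values[end]
        for k in range(gap):
            frac = (k + 1) / (gap + 1)
            filled[start + k] = left + frac * (right - left)
    return filled

readings = [20.0, None, None, 23.0, None, None, None, None, 25.0, None]
repaired = interpolate_gaps(readings)
```

The two-sample gap is filled with 21.0 and 22.0, while the four-sample outage and the trailing gap stay missing. Longer outages are better handled with model-based imputation (for example, using the digital twin's own predictions) or left flagged, since straight-line filling across hours of missing data can distort daily load shapes.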

7.3 Complexity of Integrating Diverse Computational Techniques

E2DMM inherently involves the seamless integration of disparate computational techniques: physics-based simulation, deep learning, optimization algorithms, and real-time control logic. Achieving true ‘end-to-end’ functionality requires sophisticated integration architectures.

- Challenge: Managing the computational resources required for concurrent deep learning model training, real-time BPS, and complex optimization algorithms. Ensuring efficient data exchange between these modules, handling different programming languages and APIs, and orchestrating their synchronized operation can be daunting.

- Future Directions: Development of integrated meta-platforms that abstract away much of this complexity, offering standardized interfaces and robust communication protocols. Research into co-simulation frameworks that efficiently couple different modeling paradigms. Leveraging cloud computing and edge computing resources to distribute computational loads and enable rapid inference at the building level.
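At its simplest, a co-simulation framework is a master algorithm that advances independently implemented models in lockstep and exchanges their outputs at fixed communication points, the pattern that standards such as the Functional Mock-up Interface (FMI) formalize. The sketch below couples a toy single-zone thermal model with a separate thermostat controller in this way. Both components, their parameters, and the step-method convention are hypothetical and do not follow the FMI API.

```python
class ZoneModel:
    """Toy 1R1C zone, C dT/dt = (T_out - T)/R + Q, integrated with internal sub-steps."""
    def __init__(self, temp=18.0, R=2.0, C=10.0):   # degC, K/kW, kWh/K (hypothetical)
        self.temp, self.R, self.C = temp, R, C

    def step(self, heat_kw, t_out, dt_h, substeps=10):
        h = dt_h / substeps
        for _ in range(substeps):                   # forward Euler inside the component
            self.temp += h * ((t_out - self.temp) / self.R + heat_kw) / self.C
        return self.temp

class Thermostat:
    """On/off controller with a deadband; keeps its own state like a separate component."""
    def __init__(self, setpoint=21.0, band=0.5, power_kw=12.0):
        self.setpoint, self.band, self.power_kw = setpoint, band, power_kw
        self.on = False

    def step(self, measured_temp):
        if measured_temp < self.setpoint - self.band:
            self.on = True
        elif measured_temp > self.setpoint + self.band:
            self.on = False
        return self.power_kw if self.on else 0.0

def co_simulate(hours=24, comm_step_h=0.25, t_out=5.0):
    """Master loop: exchange values between components once per communication step."""
    zone, ctrl = ZoneModel(), Thermostat()
    temp, log = zone.temp, []
    for _ in range(int(hours / comm_step_h)):
        heat = ctrl.step(temp)                      # controller sees the last exchanged value
        temp = zone.step(heat, t_out, comm_step_h)  # plant advances one communication step
        log.append((temp, heat))
    return log

history = co_simulate()
```

After a warm-up of roughly eight simulated hours the zone cycles within about half a degree of the deadband edges. Shrinking comm_step_h tightens that overshoot at the cost of more data exchanges, which is the central accuracy/performance trade-off in co-simulation.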

7.4 Model Interpretability and Explainability (XAI)

Many deep learning models, particularly complex neural networks, operate as ‘black boxes,’ making it difficult to understand the rationale behind their predictions or recommended actions. This lack of transparency can hinder trust and adoption by building operators and decision-makers.

- Challenge: When an E2DMM system suggests a counter-intuitive control action, operators need to understand why, both to build confidence in the system and to intervene if necessary. Similarly, for fault diagnosis, knowing what caused the fault and how the model reached that conclusion is critical.

- Future Directions: Continued research and application of Explainable AI (XAI) techniques, such as LIME (Local Interpretable Model-agnostic Explanations), SHAP (SHapley Additive exPlanations), or attention mechanisms in neural networks. Developing methods to visualize and articulate the impact of different input features on model predictions, making E2DMM more transparent and trustworthy for human oversight and intervention.
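For intuition about what SHAP computes, consider the special case of a linear model with independent features. There the SHAP value of feature i reduces to w_i (x_i - E[x_i]), the feature's weight times its deviation from the average input, and the attributions sum exactly to the gap between this prediction and the average prediction. The model, feature names, and numbers below are hypothetical; for non-linear models such as the ANN twins discussed earlier, dedicated libraries (for example the shap package) are used to compute or approximate these values.

```python
def linear_shap(weights, bias, x, background):
    """Exact SHAP values for f(x) = bias + sum(w_i * x_i), assuming independent features.

    background: list of input vectors used to estimate E[x_i].
    """
    n = len(background)
    means = [sum(row[i] for row in background) / n for i in range(len(weights))]
    phi = [w * (xi - m) for w, xi, m in zip(weights, x, means)]
    f_x = bias + sum(w * xi for w, xi in zip(weights, x))
    f_mean = bias + sum(w * m for w, m in zip(weights, means))
    return phi, f_x, f_mean

# Hypothetical cooling-load model; features: outdoor temp (degC), occupancy, solar (W/m2).
weights, bias = [3.0, 0.5, 0.02], 10.0
background = [[20.0, 40.0, 300.0], [30.0, 80.0, 700.0]]
x = [32.0, 50.0, 800.0]
phi, f_x, f_mean = linear_shap(weights, bias, x, background)
```

An operator reading this output would see that the hot afternoon (+21) and strong sun (+6) raise the predicted load, while below-average occupancy (-5) lowers it, and that these contributions add up to the 22-unit difference from the typical prediction.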

7.5 Scalability and Generalizability

Developing an E2DMM framework for one building is complex; scaling it to a portfolio of diverse buildings or ensuring its generalizability to new, unseen buildings presents a greater challenge.

- Challenge: The significant effort required for data collection, digital twin creation, calibration, and model training for each individual building can be prohibitive. Models trained on one building may not perform well on another due to differences in architecture, systems, climate, or occupancy.

- Future Directions: Research into meta-learning approaches (learning to learn), where models are trained to quickly adapt to new building contexts with minimal data. Development of transfer learning techniques that leverage pre-trained models from large datasets and fine-tune them for specific buildings. Creating modular E2DMM architectures that allow for easy re-configuration and deployment across different building types.
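One simple form of the transfer-learning idea is to fine-tune a model pre-trained on other buildings using only a few samples from the target building, while regularizing the new parameters toward the pre-trained ones so that scarce data cannot pull them too far. The sketch below does this in closed form for a one-parameter load model (load ≈ w · x); the model form, the pre-trained value, and the regularization strength lam are assumptions for illustration, and deep-learning fine-tuning would instead continue gradient training from the pre-trained weights.

```python
def fine_tune(w_pretrained, xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * (w - w_pretrained)^2 in closed form."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return (sxy + lam * w_pretrained) / (sxx + lam)

w_source = 0.8               # slope learned on a portfolio of other buildings (hypothetical)
target_x = [10.0, 20.0]      # only two observations from the new building
target_y = [12.0, 22.0]

w_prior_only = fine_tune(w_source, [], [], lam=100.0)           # no target data: keep the prior
w_target_only = fine_tune(w_source, target_x, target_y, lam=0.0)
w_blend = fine_tune(w_source, target_x, target_y, lam=500.0)
```

With no target data the model falls back to the portfolio prior (0.8), with no regularization it fits the two target points alone (1.12), and in between it blends the two (0.96). In practice lam would be chosen by validation on held-out data from the target building.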

7.6 Integration with Urban-Scale Energy Systems and Smart Grids

The ultimate vision for building energy optimization extends beyond individual buildings to their integration within larger urban energy ecosystems and smart grids.

- Challenge: Coordinating energy demand and generation across multiple buildings, interacting with dynamic electricity grids (including demand response programs and renewable energy integration), and optimizing at a district or city scale while considering complex interdependencies.

- Future Directions: Developing ‘digital twins of twins’ or ‘urban digital twins’ that can model and optimize energy flows across entire districts. Integrating E2DMM with grid-level energy management systems to enable sophisticated demand-side management, virtual power plants, and optimized renewable energy dispatch. Research into multi-agent reinforcement learning for coordinating energy decisions among numerous interdependent buildings.


8. Conclusion

End-to-End Deep Meta Modeling (E2DMM) represents a profoundly transformative and necessary evolution in the domain of building energy performance optimization. By ingeniously integrating the predictive power of advanced deep learning algorithms, the real-time simulation capabilities of high-fidelity digital twin technology, and the insights gleaned from vast, heterogeneous datasets, E2DMM offers a comprehensive, adaptive, and intelligent solution framework. This holistic approach is uniquely equipped to address the inherent complexities, dynamic nature, and multifaceted interdependencies that characterize modern building energy systems.

This report has detailed the foundational computational techniques, described the process of digital twin construction and dynamic utilization, underscored the pivotal role of comprehensive datasets, and outlined the essential software and tools that collectively enable E2DMM. The case studies presented here illustrate the practical applicability and effectiveness of this integrated approach. From significant reductions in energy costs and improved occupant comfort to greater use of renewable energy sources, E2DMM has demonstrated its capacity to deliver multi-objective optimization outcomes that are difficult to achieve with traditional methods.

While challenges persist, notably in areas such as data privacy, standardization, model interpretability, and scalability, rapid advancements in artificial intelligence, sensor technologies, and computing infrastructure suggest that these hurdles are surmountable. Future research and collaborative efforts are expected to refine E2DMM methodologies, leading to more robust, efficient, and trustworthy systems. E2DMM is not merely an incremental improvement; it is a paradigm shift paving the way for a new era of truly smart, sustainable, and energy-resilient building operations. Its continued development and widespread implementation are crucial for realizing a more sustainable built environment and achieving global energy and climate objectives.


The integration of physics-informed neural networks seems particularly promising. Bridging the gap between data-driven models and established physical principles could significantly enhance the accuracy and reliability of building energy optimization strategies. How might we best validate these hybrid models in real-world scenarios?

Thanks for your insightful comment! Real-world validation of PINNs is crucial. One promising avenue is using A/B testing, deploying PINN-controlled systems in some building zones and comparing performance against traditionally controlled zones. Continuous monitoring and recalibration, informed by real-time data, are also key. This ensures the hybrid models remain accurate despite evolving conditions.

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

Wow, talk about a deep dive! I feel like I need a PhD just to *read* the abstract. But seriously, impressive work! Has anyone considered adding a “chaos monkey” to these digital twins, just to see how energy systems react to completely unpredictable events? For science, of course.

That’s a brilliant idea! A “chaos monkey” approach could reveal vulnerabilities and improve resilience in our energy systems. It would be interesting to see which control strategies could handle truly random disruptions. Perhaps a simulated power surge or equipment failure? We need to try that #DigitalTwins #EnergyEfficiency

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

The discussion of Explainable AI (XAI) in the context of double-skin facade optimization is particularly compelling. Exploring how XAI can demystify the decision-making processes of AI models could foster greater trust and adoption of these technologies in building design and operation.

I agree! The integration of XAI is key for wider acceptance. We’re exploring how to best visualize the AI’s reasoning, perhaps through interactive dashboards that show the influence of various parameters on the double-skin facade’s performance. This could provide valuable insights for designers and operators alike, what are your thoughts?

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

The discussion of data privacy and security is critical. As E2DMM evolves, exploring blockchain for secure data management and federated learning for privacy-preserving model training could foster user trust and encourage wider adoption of these powerful technologies.

Thank you for highlighting the importance of data privacy and security! Your suggestions regarding blockchain and federated learning are spot on. Exploring those avenues will be crucial for building user trust. How do you envision balancing the need for detailed data analysis with robust privacy protection as these systems become more complex?

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

The case study on double-skin facade optimization is fascinating. The use of ANN twins as surrogates for CFD simulations highlights a promising approach to reducing computational costs. Could similar AI surrogate models be developed for other computationally intensive building performance simulations like daylighting or structural analysis?

Thanks for the great question! Absolutely, the ANN twin approach can be extended. We think AI surrogates are promising for daylighting simulations, where complex sky conditions impact results. Structural analysis could also benefit, especially for exploring numerous design options. It would be interesting to see how accuracy compares to traditional methods. #E2DMM

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

Physics-informed neural networks as smart guardians for DRL agents? Genius! So, when can we expect buildings to start arguing with their AI, demanding slightly warmer temperatures despite what the equations say? Asking for a friend who likes wearing shorts in winter.

That’s a funny thought! Maybe we’ll need to add a ‘negotiation module’ to the AI to handle those requests. Perhaps we could use reinforcement learning to see what temperature ranges are acceptable before open revolt breaks out among the occupants! What factors might influence comfort range in your opinion?

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

Given the scalability challenges noted, could generative design techniques be integrated to automate the creation of digital twins for diverse building types, accelerating E2DMM deployment across larger building portfolios?

That’s a great point! Using generative design to automate digital twin creation could definitely address scalability. Imagine AI generating initial twin structures based on building blueprints, then refining them with real-world data. This could significantly speed up deployment across building portfolios. What level of customization do you think would still be necessary?

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

E2DMM sounds like the AI has to learn thermodynamics and also people skills. Can we get a demo where the AI negotiates with a building full of employees who all have different definitions of “comfortable”? I’m imagining tiny AI avatars staging miniature office temperature wars.

That’s a hilarious image! It does highlight the challenge of balancing energy efficiency with individual comfort. Perhaps personalized micro-climate control via smart desks is in our future, where individuals directly influence their immediate surroundings. What other factors might contribute to personal thermal satisfaction?

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

So, buildings are about to start arguing with their AI over temperatures?! Guess I’ll need a new office wardrobe consisting entirely of layers, ready for the inevitable AI-driven climate battles. Anyone taking bets on which floor wins the “thermostat war” first?

That’s a hilarious image! Perhaps the AI could learn from game theory to resolve thermostat disputes fairly. We could imagine an office building, where each floor has a ‘temperature preference score’. Floors could then bid temperature points until a mutually acceptable climate is reached. #SmartBuildings #AIinHVAC

Editor: FocusNews.Uk

Thank you to our Sponsor Focus 360 Energy

The discussion of scalability is important. I wonder about the potential of cloud-based, containerized deployments of E2DMM microservices. Could this approach streamline deployment and management across diverse building portfolios, while reducing the computational burden on individual buildings?

Thanks for raising that point. Containerization and cloud deployment of microservices are a great way to scale up. Imagine each building having a ‘digital twin container’ managed centrally. This could enable real-time updates and resource optimization across many buildings simultaneously, as well as reduced building computational demand.

Editor: FocusNews.Uk

The report mentions the challenge of ensuring models trained on one building can generalize to others. Could transfer learning or domain adaptation techniques be effectively applied to leverage knowledge across diverse building types and operational contexts?

That’s an excellent question! Transfer learning and domain adaptation are definitely key to scaling E2DMM. We’re exploring methods to pre-train models on synthetic data or large, diverse datasets, then fine-tune them with limited data from specific buildings. This could improve generalization and reduce deployment costs. What are your thoughts on synthetic pre-training versus real-world pre-training?
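The pre-train-then-fine-tune pattern can be illustrated with a deliberately tiny model. This is a minimal sketch, assuming a one-feature linear model of heating load against a normalized outdoor temperature, fitted by plain gradient descent; the data, the helper names `train` and `mse`, and the learning rates are hypothetical stand-ins for a large source dataset and a data-poor target building.

```python
def train(data, w=0.0, b=0.0, lr=0.1, epochs=100):
    """Gradient descent on mean squared error, approximating y by w * x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data, w, b):
    """Mean squared prediction error of (w, b) on data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Source: plentiful (here synthetic) data from a reference building, y = 10 - 8x.
source = [(i / 10, 10 - 8 * (i / 10)) for i in range(11)]
# Target: only three readings from a new building whose true response is y = 12 - 7x.
target = [(0.0, 12.0), (0.5, 8.5), (1.0, 5.0)]

w_pre, b_pre = train(source, lr=0.5, epochs=2000)        # pre-training on the source
w_ft, b_ft = train(target, w=w_pre, b=b_pre, epochs=50)   # fine-tuning from pre-trained weights
w_scratch, b_scratch = train(target, epochs=50)           # same small budget, no pre-training

print(f"pre-trained, on target:  {mse(target, w_pre, b_pre):.4f}")
print(f"fine-tuned, on target:   {mse(target, w_ft, b_ft):.4f}")
print(f"from scratch, on target: {mse(target, w_scratch, b_scratch):.4f}")
```

In this toy setup, fine-tuning from the pre-trained weights reaches a much lower target error than training from scratch with the same 50-step budget, because the pre-trained starting point is already close to the target building's response.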

Editor: FocusNews.Uk

The report highlights the need for high-quality datasets. I’m curious about the potential of synthetic data generation, perhaps using generative design or GANs, to augment real-world data and improve model training, particularly for edge cases or scenarios with limited available data.

That’s an interesting point! Synthetic data holds great promise. Perhaps a hybrid approach, where generative models are conditioned on real-world data, could provide the best of both worlds? This could offer increased robustness, especially for buildings with limited sensor deployments.
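A hybrid of this kind can be illustrated with a much simpler generative model than a GAN. The sketch below, assuming readings tagged with a condition such as hour of day, fits a Gaussian per condition to the real data and samples synthetic readings from it; the helper names `fit_conditional_gaussian` and `sample_synthetic` and the example readings are hypothetical, and the per-condition Gaussian is only a stand-in for a conditioned generative model.

```python
import random
import statistics
from collections import defaultdict


def fit_conditional_gaussian(readings):
    """Fit a mean and standard deviation per condition from (condition, value) pairs."""
    groups = defaultdict(list)
    for condition, value in readings:
        groups[condition].append(value)
    # statistics.stdev needs at least two samples, so sparse conditions are skipped.
    return {
        condition: (statistics.mean(values), statistics.stdev(values))
        for condition, values in groups.items()
        if len(values) >= 2
    }


def sample_synthetic(model, condition, n, seed=None):
    """Draw n synthetic readings for one condition from its fitted Gaussian."""
    rng = random.Random(seed)
    mu, sigma = model[condition]
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical real readings: (hour of day, electrical load in kW).
real = [(8, 10.0), (8, 12.0), (8, 11.0), (18, 5.0), (18, 6.0), (3, 2.0)]
model = fit_conditional_gaussian(real)
synthetic_8am = sample_synthetic(model, 8, n=5, seed=42)
```

Conditioning on real statistics keeps the synthetic samples anchored to observed behaviour, but a condition with too few real readings (hour 3 here) gets no model at all, which mirrors the limited-sensor problem raised in the comment.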

Editor: FocusNews.Uk

The discussion of data privacy is critical. Integrating federated learning into E2DMM raises interesting possibilities for edge computing: model training could be distributed across multiple buildings so that raw data need not be centralized.

Thanks for emphasizing the importance of edge computing with federated learning! Distributing AI models across buildings can unlock significant benefits. Have you considered the challenge of maintaining model consistency and performance across diverse edge devices with varying computational resources?
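Federated averaging (FedAvg), a standard federated learning scheme, gives one concrete way to picture this. The sketch below is a minimal illustration, assuming each building fits the same one-feature linear model locally and a coordinator averages the parameters weighted by sample count; giving buildings different numbers of local epochs is a hypothetical way to mimic edge devices with unequal compute. All function names and data are illustrative.

```python
def local_update(w, b, data, epochs, lr=0.1):
    """Run a few epochs of gradient descent on one building's private data."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates):
    """Average ((w, b), n_samples) pairs, weighting each building by its sample count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return w, b


def run_rounds(buildings, rounds, w=0.0, b=0.0):
    """buildings: list of (data, local_epochs). Only parameters leave each building."""
    for _ in range(rounds):
        updates = [(local_update(w, b, data, epochs), len(data)) for data, epochs in buildings]
        w, b = federated_average(updates)
    return w, b


# Two hypothetical buildings whose readings follow y = 2x + 1; the second
# device is assumed to have more compute and runs more local epochs per round.
building_a = ([(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)], 1)
building_b = ([(0.25, 1.5), (0.75, 2.5)], 5)
w, b = run_rounds([building_a, building_b], rounds=2000)
```

Because both buildings share the same underlying relationship here, the averaged model converges to w = 2, b = 1. With genuinely different buildings and unequal local epochs, plain FedAvg can be biased toward the buildings that take more local steps, which is one form of the consistency problem raised above.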

Editor: FocusNews.Uk

The discussion on data privacy is essential. Applying differential privacy techniques when training E2DMM models could let them learn valuable insights while protecting individual data points, which would greatly facilitate user trust and adoption.

That’s a great suggestion! Differential privacy could be a game-changer for user trust. It is interesting to consider how we can maintain the utility of E2DMM for building optimization while protecting individual privacy. What impact do you see differential privacy having on model accuracy?
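The accuracy trade-off can be made concrete with the Laplace mechanism, a standard differential privacy technique. The sketch below is a minimal illustration, assuming occupant-level readings (for example, reported comfort temperatures) clipped to a known range and a reading count n that is treated as public; the function names and data are hypothetical. For a clipped mean, replacing one reading changes the result by at most (upper - lower) / n, so the noise scale is that sensitivity divided by epsilon: a smaller epsilon (stronger privacy) or fewer readings means a noisier answer.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noise_scale(n, lower, upper, epsilon):
    """Laplace scale for a clipped mean: sensitivity (upper - lower) / n, divided by epsilon."""
    return (upper - lower) / (n * epsilon)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Epsilon-differentially private mean of readings clipped to [lower, upper]."""
    rng = rng or random.Random()
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    return true_mean + laplace_noise(noise_scale(len(clipped), lower, upper, epsilon), rng)


# Hypothetical comfort-temperature reports (degrees C) from four occupants.
reports = [20.0, 22.0, 24.0, 26.0]
rng = random.Random(0)
for eps in (0.1, 1.0, 10.0):
    # The expected absolute error of Laplace noise equals its scale.
    print(eps, noise_scale(len(reports), 18.0, 28.0, eps), private_mean(reports, 18.0, 28.0, eps, rng))
```

Each released statistic consumes privacy budget, so repeated queries over the same occupants add up; this sketch covers a single release only.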

Editor: FocusNews.Uk

The discussion on integrating building data with smart grids is exciting. Considering the variability of renewable energy sources, how can E2DMM effectively forecast and manage energy demand to ensure grid stability while maximizing the use of renewables?

Great point about integrating E2DMM with smart grids! The variability of renewables is a key challenge. We’re exploring AI-driven forecasting that considers weather patterns and grid demand to proactively adjust building energy consumption. This could involve dynamically shifting loads or optimizing on-site storage to smooth out demand peaks and support grid stability. How might incentives encourage participation?
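One simple way to picture load shifting against a renewable forecast is greedy valley filling on net load (demand minus forecast renewable generation). This is a minimal sketch, assuming hourly forecasts are already available and that a fixed amount of flexible energy, such as pre-cooling or EV charging, can be placed in any hour up to an optional cap; the function `schedule_flexible_load` and its parameters are hypothetical, and on-site storage is not modeled.

```python
def schedule_flexible_load(base_demand, renewable_forecast, flexible_kwh, step=0.5, max_per_hour=None):
    """Place flexible energy, chunk by chunk, into the hour with the lowest net load.

    base_demand, renewable_forecast: per-hour kWh forecasts of equal length.
    Returns the flexible kWh assigned to each hour.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    net = [d - r for d, r in zip(base_demand, renewable_forecast)]
    added = [0.0] * len(net)
    remaining = flexible_kwh
    while remaining > 1e-9:
        chunk = min(step, remaining)
        # Hours that can still absorb this chunk without exceeding the optional cap.
        candidates = [
            h for h in range(len(net))
            if max_per_hour is None or added[h] + chunk <= max_per_hour + 1e-9
        ]
        if not candidates:
            break  # flexible load that cannot be placed is left unscheduled
        # Filling the deepest valley first flattens the net-load profile.
        hour = min(candidates, key=lambda h: net[h] + added[h])
        added[hour] += chunk
        remaining -= chunk
    return added


# Hypothetical 4-hour window: flat demand, solar output concentrated in hours 1 and 2.
plan = schedule_flexible_load([5.0, 5.0, 5.0, 5.0], [0.0, 2.0, 3.0, 0.0], flexible_kwh=4.0, step=1.0)
```

Here the 4 kWh lands entirely in the two solar hours, so the schedule absorbs forecast renewable output without raising the 5 kWh net peak. A per-hour cap spreads the load more evenly at the cost of using less of the renewable surplus.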

Editor: FocusNews.Uk
